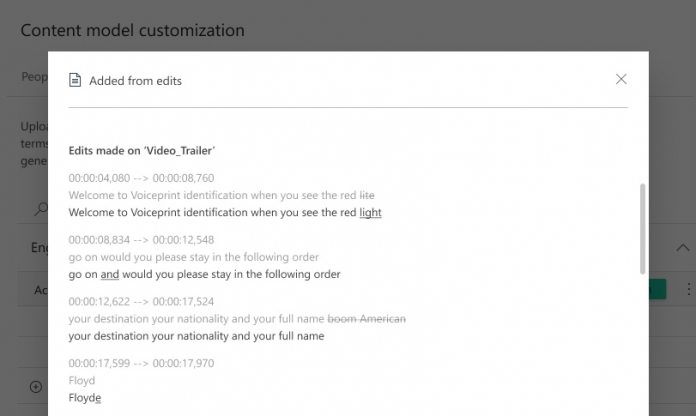

If you are unfamiliar with VI, it automatically generates metadata from media that users upload. It does this partly by transcribing the video in any of the 10 languages supported by Microsoft Translator, which lets the indexer add subtitles to the file. VI also uses facial recognition to detect the people who appear in the video. If a person is well known, the app assigns a name tag automatically; otherwise, users can add the name themselves.

Among the new tools in the web app is the ability to capture manual transcript edits automatically. When a user edits a transcript in the Timeline, the changes are captured in a text file and automatically added to the language model used to index that media. If no custom language model was used, the edited text is placed in Account Adaptations, a new model in VI. Users can then review the original and edited versions and choose to update their language model with the “Train” tool.
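The edit-capture flow described above can be sketched in Python as a small in-memory model. This is a minimal illustration under stated assumptions, not Video Indexer's implementation: `LanguageModel`, `capture_edits`, `route_edits`, the file name `my-video-edits.txt`, and the way `train()` folds edits into the model are all hypothetical, and `difflib` is simply one way to pick out the changed lines.

```python
import difflib
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical stand-ins for illustration only; none of these are Video Indexer APIs.
ACCOUNT_ADAPTATIONS = "Account Adaptations"


@dataclass
class LanguageModel:
    name: str
    pending: Dict[str, str] = field(default_factory=dict)  # file name -> edits awaiting training
    trained: Dict[str, str] = field(default_factory=dict)  # file name -> text already trained on

    def train(self) -> None:
        # Hypothetical stand-in for the "Train" step: fold pending edit files into the model.
        self.trained.update(self.pending)
        self.pending.clear()


def capture_edits(original: List[str], edited: List[str]) -> str:
    """Return the replaced or inserted transcript lines as a plain-text file body."""
    matcher = difflib.SequenceMatcher(a=original, b=edited)
    changed: List[str] = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "insert"):
            changed.extend(edited[j1:j2])
    return "\n".join(changed)


def route_edits(models: Dict[str, LanguageModel], indexing_model: Optional[str],
                file_name: str, text: str) -> LanguageModel:
    """Send edits to the model used for indexing, or to Account Adaptations if none was used."""
    target = indexing_model if indexing_model is not None else ACCOUNT_ADAPTATIONS
    if target not in models:
        models[target] = LanguageModel(target)
    models[target].pending[file_name] = text
    return models[target]


# Usage: a video indexed without a custom model, with one corrected transcript line.
models: Dict[str, LanguageModel] = {}
edits = capture_edits(["Hello world", "this is a test"],
                      ["Hello world", "this is the test"])
model = route_edits(models, None, "my-video-edits.txt", edits)
model.train()
```

Keeping "pending" and "trained" separate mirrors the article's point that edits are collected automatically but only change the model once the user chooses to train it.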

Improved API

Microsoft has also improved the “update video transcript” API in Video Indexer. When a customer uploads a manually edited transcript file through this API, the file is now automatically added to the relevant custom language model as training material. According to Microsoft: “As a part of the new enhancements, when a customer uses this API, Video Indexer also adds the transcript that the customers uploaded to the relevant custom model automatically in order to leverage the content as training material. For example, calling update video transcript for a video titled ‘Godfather’ will result with a new transcript file named ‘Godfather’ in the custom language model that was used to index that video.”
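The behavior described in the quote can be illustrated with a small in-memory model. The sketch below is hypothetical and does not call the actual Video Indexer REST API: `CustomLanguageModel`, `Video`, `update_video_transcript`, and the model name `Movies` are illustrative names, and skipping the training step when no custom model was used is an assumption the quote does not address.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class CustomLanguageModel:
    name: str
    files: Dict[str, str] = field(default_factory=dict)  # file name -> training transcript


@dataclass
class Video:
    title: str
    indexing_model: Optional[CustomLanguageModel]  # custom model used at index time, if any
    transcript: str = ""


def update_video_transcript(video: Video, uploaded_transcript: str) -> None:
    """Hypothetical stand-in for the quoted behavior; this is not the real REST call."""
    video.transcript = uploaded_transcript
    # Assumption: nothing is added when the video was not indexed with a custom model.
    if video.indexing_model is not None:
        # The upload becomes training material, named after the video's title.
        video.indexing_model.files[video.title] = uploaded_transcript


# Usage mirroring the "Godfather" example from the quote.
movies_model = CustomLanguageModel("Movies")
godfather = Video("Godfather", movies_model)
update_video_transcript(godfather, "I'm gonna make him an offer he can't refuse.")
```

After the call, `movies_model.files` holds a single entry keyed `"Godfather"`, matching the quote's description of a new transcript file named after the video in the model used to index it.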